MantisBT - Zandronum |

| View Issue Details |

|

| ID | Project | Category | View Status | Date Submitted | Last Update |

| 0003334 | Zandronum | [All Projects] Bug | public | 2017-11-08 00:52 | 2025-08-08 23:00 |

|

| Reporter | Leonard | |

| Assigned To | Kaminsky | |

| Priority | high | Severity | major | Reproducibility | always |

| Status | assigned | Resolution | open | |

| Platform | | OS | | OS Version | |

| Product Version | 3.0 | |

| Target Version | 3.3 | Fixed in Version | | |

|

| Summary | 0003334: Tickrate discrepancies between clients/servers |

| Description | This issue was first described by unknownna here: 0002859:0015907.

All of the issues in that summary are addressed by 0003314, 0003317 and 0003316, except for one:

Quote from unknownna

* Desyncs a little consistently every 24-25 seconds.

This turns out to be slightly off, though: the exact period is 28 seconds.

When clients start on either Linux or Windows, a timing method is selected: either Zandronum creates a timing event fired by the OS and uses it, or it uses time polling instead.
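For readers unfamiliar with time polling, here is a minimal sketch of the idea, assuming a monotonic millisecond clock and a nominal integer tickrate. `TicPoller`, `ticsAt` and `poll` are hypothetical names, not Zandronum's actual functions. Instead of waiting for an OS event, the loop reads the clock and derives how many whole tics have elapsed since startup, so rounding never accumulates from one tic to the next.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical polling tic counter (illustrative, not Zandronum's code).
// The tic count is always derived from the total elapsed time, so
// rounding never accumulates from one tic to the next.
class TicPoller {
public:
    explicit TicPoller(std::int64_t ticRate) : ticRate_(ticRate) {
        assert(ticRate > 0);
    }

    // Whole tics elapsed after elapsedMs milliseconds (integer floor).
    std::int64_t ticsAt(std::int64_t elapsedMs) const {
        assert(elapsedMs >= 0);
        return elapsedMs * ticRate_ / 1000;
    }

    // Called each time the clock is polled; returns how many new tics
    // the game loop should run since the previous poll.
    std::int64_t poll(std::int64_t elapsedMs) {
        const std::int64_t now = ticsAt(elapsedMs);
        const std::int64_t newTics = now - lastTic_;
        lastTic_ = now;
        return newTics;
    }

private:
    std::int64_t ticRate_;
    std::int64_t lastTic_ = 0;
};
```

For example, with `TicPoller poller(35)`, polling at 10 ms, 60 ms and 1000 ms yields 0, 2 and 33 new tics respectively, i.e. exactly 35 tics after one second.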

Unfortunately, as described in this thread from the ZDoom forums, Windows timing events are only precise to the millisecond, so due to rounding only a delay of 28 ms can be achieved.

Zandronum servers, on the other hand, always use time polling and, as a way of dealing with this issue, use a tickrate fixed at 35.75 Hz:

lNewTics = static_cast<LONG> ( lNowTime / (( 1.0 / (double)35.75 ) * 1000.0 ) );

Can you spot the problem here?

As described in the linked thread, a time step of exactly 28 ms would theoretically result in a frequency of (1000 / 28) = 35.714285714... Hz.

Using a tickrate of 35.75 Hz instead results in a time step of (1000 / 35.75) = 27.972027972... ms.

Calculating the difference and dividing the client time step by it (i.e. magnifying that difference until it equals one time step) gives, you guessed it, 28 seconds:

(28 / (28 - (1000 / 35.75))) = 1001 tics or 28 seconds.

This means that after 28 seconds, the server will essentially be ahead of us by 1 tic.
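The arithmetic can be double-checked with two small helpers (hypothetical names). `driftPeriodMs` solves T / faster - T / slower = 1 for the time T after which two tic loops are one tic apart, and `driftPeriodTics` expresses that period in tics of the faster loop, which is the slower / (slower - faster) formula used above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Time (ms) after which two tic loops with steps stepAMs and stepBMs have
// drifted apart by exactly one tic: T / faster - T / slower = 1.
double driftPeriodMs(double stepAMs, double stepBMs) {
    const double diff = std::fabs(stepAMs - stepBMs);
    assert(diff > 0.0);  // equal steps never drift apart
    return stepAMs * stepBMs / diff;
}

// The same period counted in tics of the faster loop; this equals
// slower / (slower - faster).
double driftPeriodTics(double stepAMs, double stepBMs) {
    return driftPeriodMs(stepAMs, stepBMs) / std::min(stepAMs, stepBMs);
}
```

`driftPeriodTics(28.0, 1000.0 / 35.75)` evaluates to about 1001 tics (28000 ms), and the 35 Hz client against a 28 ms server case, `driftPeriodTics(1000.0 / 35.0, 28.0)`, to about 50 tics (1400 ms), up to floating-point rounding.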

Client-side prediction accounts for this, so clients themselves won't notice a thing, but outside spectators sure will.

Attached is a demo of the desync occurring, recorded with cl_predict_players false (28ms.cld).

By making the server loop use a proper time step of 28ms, the issue is fixed:

lNewTics = static_cast<LONG> ( lNowTime / 28.0 );
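To see why the 28 ms step removes the periodic desync, a small integer simulation can compare both server formulas against a client whose OS timer fires exactly every 28 ms. This is a sketch under that assumption; the function names are hypothetical, and 35.75 Hz is written as 143 tics per 4000 ms, which matches the floating-point formula above up to rounding.

```cpp
#include <cassert>
#include <cstdint>

// Server tic count using the 35.75 Hz formula, in integer form:
// 35.75 tics per second == 143 tics per 4000 ms.
std::int64_t serverTics3575Hz(std::int64_t nowMs) {
    assert(nowMs >= 0);
    return nowMs * 143 / 4000;
}

// Server tic count using the proposed fixed 28 ms step.
std::int64_t serverTics28ms(std::int64_t nowMs) {
    assert(nowMs >= 0);
    return nowMs / 28;
}

// Walk the client's 28 ms tic boundaries and return the first client tic
// at which the given server counter is already a whole tic ahead,
// or -1 if that never happens within maxClientTics.
template <typename ServerTics>
std::int64_t firstClientTicWithServerAhead(ServerTics serverTics,
                                           std::int64_t maxClientTics) {
    for (std::int64_t clientTic = 1; clientTic <= maxClientTics; ++clientTic) {
        if (serverTics(clientTic * 28) > clientTic) {
            return clientTic;
        }
    }
    return -1;
}
```

With the 35.75 Hz formula, the server is a full tic ahead at client tic 1000, i.e. after 28000 ms (1001 server tics), while the 28 ms formula never pulls ahead.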

However, a problem still remains: the Windows timing method is the ONLY one that has a time step of 28 ms.

If creating the timer fails at startup for some reason, Windows falls back to time polling, which will obviously run at a proper 35 Hz.

Linux timer events are precise to the microsecond, as reported in the thread, and will run at a proper 35 Hz for both polling and timing events.

As such, even with a fixed time step of 28 ms on the server, clients using a proper 35 Hz tickrate will experience a desync too, and a much worse one at that.

Repeating the calculation for this case:

((1000 / 35) / ((1000 / 35) - 28)) = 50 tics.

That is only 50 tics (about 1.4 seconds) before these clients appear to jitter to outside observers.

I will attach a demo of this with cl_predict_players false as well (35hz.cld).

The solution is obviously to enforce a consistent tickrate in every case, but which one?

Should we force the time step to be 28 ms globally, or should we simply disable Windows timer events, use 35 Hz on the servers and be done with it?

In my opinion, we should simply disable Windows timer events, and here are my arguments why:

* The clients never slept to begin with if cl_capfps is off.

* As per 0001633, the timer events are already disabled on Linux for clients due to being faulty.

* Proper 35 Hz.

I'm not sure, but doesn't the fact that the tickrate is not a proper 35 Hz mean that mods relying on it being correct experience time drift?

For example, if a mod uses a timer in ACS that's supposed to count time at 35 Hz, does it keep track of the time correctly?

I haven't really checked this, so I'm not sure, but that could be a thing.

* If we want to look at some sort of standard, Quake 3 uses time polling for both its clients and servers and, just like Zandronum, only the servers sleep. |

| Steps To Reproduce | Using the test map referenced in 0002859:0018627:

-host a server on MAP01

-join a client with cl_predict_players 0

-wait at least 28 seconds

-you will notice stuttering for about a second or so; this is what outside observers actually see

You may also use the debug output from the linked commit, which shows the desync happening in real time (the server messages arriving at different times and the prediction suddenly having to run 2 ticks even though this is a local connection). |

| Additional Information | The demos were both recorded on the same test map described in the steps to reproduce. |

| Tags | No tags attached. |

| Relationships | | related to | 0001633 | resolved | Leonard | [Linux x86_64] Multiplayer game completely broken | | parent of | 0002859 | resolved | Leonard | Gametic-based unlagged seemingly goes out of sync often compared to ping-based unlagged | | related to | 0002491 | needs testing | Leonard | Screenshot exploit | | related to | 0003418 | resolved | Leonard | (3.1 alpha) Stuttering ingame | | related to | 0001971 | confirmed | Torr Samaho | Floors don't reset properly online for clients | | related to | 0002294 | feedback | | Very evident misprediction of movement on raising floors in general |

|

| Attached Files | 28ms.cld (86,629) 2017-11-08 00:52

/tracker/file_download.php?file_id=2273&type=bug

35hz.cld (112,668) 2017-11-08 00:57

/tracker/file_download.php?file_id=2274&type=bug

jack.cld (62,779) 2018-02-11 19:45

/tracker/file_download.php?file_id=2302&type=bug

jitter.cld (78,600) 2018-02-11 19:45

/tracker/file_download.php?file_id=2303&type=bug

unlagged_debug_03.wad (13,507) 2024-03-09 02:49

/tracker/file_download.php?file_id=2923&type=bug

tickissue.png (6,440) 2024-11-11 17:41

/tracker/file_download.php?file_id=3168&type=bug

tickissue_better.png (25,511) 2024-11-12 18:16

/tracker/file_download.php?file_id=3169&type=bug

|

|

| Issue History |

| Date Modified | Username | Field | Change |

| 2017-11-08 00:52 | Leonard | New Issue | |

| 2017-11-08 00:52 | Leonard | Status | new => assigned |

| 2017-11-08 00:52 | Leonard | Assigned To | => Leonard |

| 2017-11-08 00:52 | Leonard | File Added: 28ms.cld | |

| 2017-11-08 00:53 | Leonard | Description Updated | bug_revision_view_page.php?rev_id=11315#r11315 |

| 2017-11-08 00:57 | Leonard | File Added: 35hz.cld | |

| 2017-11-08 00:59 | Leonard | Relationship added | parent of 0002859 |

| 2017-11-08 10:54 | Edward-san | Note Added: 0018816 | |

| 2017-11-08 17:26 | Leonard | Note Added: 0018818 | |

| 2017-11-08 20:25 | Edward-san | Note Added: 0018820 | |

| 2017-11-10 02:26 | Ru5tK1ng | Note Added: 0018850 | |

| 2017-11-10 18:33 | Leonard | Note Added: 0018851 | |

| 2017-11-10 18:33 | Leonard | Status | assigned => needs review |

| 2017-11-10 20:17 | Blzut3 | Note Added: 0018852 | |

| 2017-11-10 21:11 | Blzut3 | Note Edited: 0018852 | bug_revision_view_page.php?bugnote_id=18852#r11327 |

| 2017-11-11 01:19 | Leonard | Note Added: 0018853 | |

| 2017-11-11 01:19 | Leonard | Description Updated | bug_revision_view_page.php?rev_id=11328#r11328 |

| 2017-11-13 09:16 | Leonard | Relationship added | related to 0001633 |

| 2017-11-13 14:08 | Leonard | Status | needs review => assigned |

| 2018-01-23 01:47 | Ru5tK1ng | Note Added: 0019006 | |

| 2018-01-23 20:04 | Leonard | Note Added: 0019011 | |

| 2018-01-24 01:40 | Ru5tK1ng | Note Added: 0019012 | |

| 2018-01-24 01:40 | Ru5tK1ng | Note Edited: 0019012 | bug_revision_view_page.php?bugnote_id=19012#r11382 |

| 2018-02-11 19:45 | Leonard | File Added: jack.cld | |

| 2018-02-11 19:45 | Leonard | File Added: jitter.cld | |

| 2018-02-11 19:46 | Leonard | Note Added: 0019031 | |

| 2018-03-25 15:19 | Leonard | Note Added: 0019158 | |

| 2018-03-25 15:19 | Leonard | Status | assigned => needs review |

| 2018-03-25 15:22 | Leonard | Relationship added | related to 0002491 |

| 2018-04-30 15:47 | Leonard | Note Added: 0019177 | |

| 2018-04-30 15:47 | Leonard | Status | needs review => needs testing |

| 2018-05-02 16:05 | Leonard | Relationship added | related to 0003418 |

| 2018-05-07 02:07 | StrikerMan780 | Note Added: 0019211 | |

| 2018-05-07 02:08 | StrikerMan780 | Note Edited: 0019211 | bug_revision_view_page.php?bugnote_id=19211#r11522 |

| 2018-05-07 03:09 | StrikerMan780 | Note Edited: 0019211 | bug_revision_view_page.php?bugnote_id=19211#r11523 |

| 2018-05-07 05:58 | StrikerMan780 | Note Edited: 0019211 | bug_revision_view_page.php?bugnote_id=19211#r11524 |

| 2018-05-07 05:58 | StrikerMan780 | Note Edited: 0019211 | bug_revision_view_page.php?bugnote_id=19211#r11525 |

| 2018-05-07 09:46 | Leonard | Note Added: 0019215 | |

| 2018-06-03 22:39 | StrikerMan780 | Note Added: 0019267 | |

| 2018-06-03 22:39 | StrikerMan780 | Note Edited: 0019267 | bug_revision_view_page.php?bugnote_id=19267#r11567 |

| 2024-03-06 12:27 | unknownna | Note Added: 0023304 | |

| 2024-03-06 12:58 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14105 |

| 2024-03-06 16:05 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14106 |

| 2024-03-07 09:40 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14107 |

| 2024-03-07 22:38 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14108 |

| 2024-03-07 22:39 | unknownna | Status | needs testing => feedback |

| 2024-03-09 02:49 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14109 |

| 2024-03-09 02:49 | unknownna | File Added: unlagged_debug_03.wad | |

| 2024-03-12 05:17 | unknownna | Priority | normal => high |

| 2024-03-12 11:49 | unknownna | Note Edited: 0023304 | bug_revision_view_page.php?bugnote_id=23304#r14124 |

| 2024-03-12 15:13 | Kaminsky | Note Added: 0023377 | |

| 2024-03-13 14:08 | unknownna | Note Added: 0023381 | |

| 2024-03-14 06:22 | unknownna | Note Edited: 0023381 | bug_revision_view_page.php?bugnote_id=23381#r14128 |

| 2024-03-14 08:19 | unknownna | Note Edited: 0023381 | bug_revision_view_page.php?bugnote_id=23381#r14131 |

| 2024-03-14 08:24 | unknownna | Note Edited: 0023381 | bug_revision_view_page.php?bugnote_id=23381#r14132 |

| 2024-03-14 08:26 | unknownna | Note Edited: 0023381 | bug_revision_view_page.php?bugnote_id=23381#r14133 |

| 2024-04-03 10:01 | unknownna | Note Edited: 0023381 | bug_revision_view_page.php?bugnote_id=23381#r14154 |

| 2024-04-06 00:22 | Kaminsky | Note Added: 0023521 | |

| 2024-04-06 00:22 | Kaminsky | Assigned To | Leonard => Kaminsky |

| 2024-04-06 00:22 | Kaminsky | Status | feedback => assigned |

| 2024-04-06 00:22 | Kaminsky | Target Version | 3.1 => 3.2 |

| 2024-04-06 10:00 | unknownna | Note Added: 0023523 | |

| 2024-06-08 15:43 | Kaminsky | Note Added: 0023756 | |

| 2024-06-08 18:01 | unknownna | Note Added: 0023757 | |

| 2024-06-08 18:01 | unknownna | Status | assigned => feedback |

| 2024-06-09 08:31 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14256 |

| 2024-06-09 08:42 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14257 |

| 2024-06-09 08:49 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14258 |

| 2024-06-09 08:58 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14259 |

| 2024-06-09 18:21 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14260 |

| 2024-06-12 14:13 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14261 |

| 2024-06-13 13:50 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14262 |

| 2024-06-13 14:23 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14263 |

| 2024-06-13 14:31 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14264 |

| 2024-06-13 14:40 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14265 |

| 2024-06-13 14:41 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14266 |

| 2024-06-13 20:41 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14267 |

| 2024-06-16 14:31 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14270 |

| 2024-06-16 17:21 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14273 |

| 2024-06-16 19:31 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14274 |

| 2024-06-17 14:22 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14275 |

| 2024-06-24 18:46 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14278 |

| 2024-06-24 19:28 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14279 |

| 2024-06-24 21:46 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14280 |

| 2024-06-26 17:21 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14287 |

| 2024-06-29 22:49 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14288 |

| 2024-06-29 22:55 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14289 |

| 2024-06-29 23:14 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14290 |

| 2024-07-03 04:17 | unknownna | Note Edited: 0023757 | bug_revision_view_page.php?bugnote_id=23757#r14297 |

| 2024-09-24 22:19 | unknownna | Note Added: 0024050 | |

| 2024-10-19 23:14 | unknownna | Note Edited: 0024050 | bug_revision_view_page.php?bugnote_id=24050#r14410 |

| 2024-10-22 05:44 | unknownna | Note Edited: 0024050 | bug_revision_view_page.php?bugnote_id=24050#r14413 |

| 2024-11-10 21:39 | Kaminsky | Note Added: 0024130 | |

| 2024-11-10 21:39 | Kaminsky | Status | feedback => needs review |

| 2024-11-10 21:44 | Kaminsky | Note Edited: 0024130 | bug_revision_view_page.php?bugnote_id=24130#r14431 |

| 2024-11-10 23:55 | unknownna | Note Added: 0024135 | |

| 2024-11-11 16:45 | Kaminsky | Note Added: 0024136 | |

| 2024-11-11 17:13 | Kaminsky | Note Edited: 0024136 | bug_revision_view_page.php?bugnote_id=24136#r14433 |

| 2024-11-11 17:39 | unknownna | Note Added: 0024137 | |

| 2024-11-11 17:41 | unknownna | File Added: tickissue.png | |

| 2024-11-11 23:58 | unknownna | Note Edited: 0024137 | bug_revision_view_page.php?bugnote_id=24137#r14435 |

| 2024-11-12 00:15 | unknownna | Note Edited: 0024137 | bug_revision_view_page.php?bugnote_id=24137#r14436 |

| 2024-11-12 18:16 | unknownna | File Added: tickissue_better.png | |

| 2024-11-15 04:01 | unknownna | Note Added: 0024138 | |

| 2024-11-15 05:02 | unknownna | Note Edited: 0024138 | bug_revision_view_page.php?bugnote_id=24138#r14438 |

| 2024-11-15 17:51 | Kaminsky | Note Added: 0024144 | |

| 2024-11-15 20:04 | unknownna | Note Added: 0024147 | |

| 2024-11-17 08:17 | unknownna | Note Edited: 0024147 | bug_revision_view_page.php?bugnote_id=24147#r14440 |

| 2024-11-23 23:07 | unknownna | Note Edited: 0024147 | bug_revision_view_page.php?bugnote_id=24147#r14441 |

| 2024-11-26 05:14 | unknownna | Note Edited: 0024147 | bug_revision_view_page.php?bugnote_id=24147#r14444 |

| 2024-11-27 09:15 | unknownna | Relationship added | related to 0001971 |

| 2025-02-15 23:07 | unknownna | Relationship added | related to 0002294 |

| 2025-03-18 18:57 | Kaminsky | Note Added: 0024256 | |

| 2025-03-18 18:57 | Kaminsky | Status | needs review => assigned |

| 2025-03-18 18:57 | Kaminsky | Target Version | 3.2 => |

| 2025-08-08 23:00 | Kaminsky | Target Version | => 3.3 |

|

| Notes |

Just out of curiosity: did you also check whether GZDoom multiplayer has this desync problem?

AFAIR, some years ago, when I tested on 64-bit Ubuntu with a Windows user, there were no issues like that. |

|

No, I didn't check GZDoom.

Quote from Edward-san

there weren't issues like that.

I'm assuming you're also talking about GZDoom here?

My guess is that, given the nature of GZDoom's multiplayer, it literally doesn't matter: everyone connected experiences the worst latency, so even on a LAN a different tickrate would only mean a slight extra latency (0.5 ms) for the Windows user, who is ticking faster.

If you're talking about Zandronum, bear in mind that the client does not see this for itself, and even if you hosted on Linux, the server still uses the same loop, so you would still only notice the other user's jitter every 28 seconds. |

|

Quote

I'm assuming you're also talking about GZDoom here?

Correct. |

|

Disabling Windows timer events seems more sensible and consistent. I would say just start working on implementing 35 Hz. |

|

PR.

This is for proper 35 Hz.

If for some reason we end up going for 28 ms, though, I can always edit it later. |

|

(0018852) Blzut3, 2017-11-10 20:17 (edited on: 2017-11-10 21:11)

I'm confused by "The clients never slept to begin with, why bother with a timer?" The difference between the polled and event timers should be whether or not Zandronum uses 100% CPU with cl_capfps on (I think vid_vsync would be affected too, but I'm not certain). If disabling the event timer does indeed cause 100% CPU usage, expect a lot of angry laptop users.
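For reference, a polled loop does not have to spin at 100% CPU: it can compute when the next tic is due and sleep until then. The sketch below assumes C++ `std::chrono::steady_clock` and `std::this_thread::sleep_until`; `ticOffset` and `waitForTic` are hypothetical names and are not the engine's actual `I_WaitForTic` functions.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <thread>

using Clock = std::chrono::steady_clock;

// Offset of the start of tic `tic` from the loop's start. It is computed
// from the tic index rather than by repeatedly adding a rounded step, so
// no drift builds up over time.
std::chrono::nanoseconds ticOffset(std::int64_t tic, std::int64_t ticRate) {
    assert(tic >= 0 && ticRate > 0);
    return std::chrono::nanoseconds(tic * 1'000'000'000LL / ticRate);
}

// Block the calling thread (instead of busy-waiting) until tic `tic` is due.
// sleep_until returns immediately if the deadline has already passed.
void waitForTic(Clock::time_point start, std::int64_t tic, std::int64_t ticRate) {
    std::this_thread::sleep_until(start + ticOffset(tic, ticRate));
}
```

How precisely the thread actually wakes up still depends on the OS scheduler's timer resolution, which is the same kind of limitation discussed in the description.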

On a related note, I believe 3.0 got the updated Linux timer and should be fine now modulo the issue you're trying to solve.

Edit: Regarding the ACS timing point: it has been a long time, but the last time I looked at the 35.75 Hz issue, I did notice some mods were correcting for the round-off. So some mods are going to be wrong with either choice, but it really doesn't matter unless your hobby is watching timers and looking for drift. :P

|

Oh, you're right, I didn't check the I_WaitForTic functions.

Then I guess we lose sleeping with cl_capfps on for Windows. |

|

Is the loss of sleep on Windows the reason the patch wasn't pulled? |

|

The loss-of-sleep issue was solved. The reason this hasn't been addressed yet is that having consistent tickrates (both 35 Hz and 28 ms) revealed a much bigger problem that needs to be addressed first. |

|

For those following this ticket and other interested parties: what bigger issue was revealed? I'm assuming 1633 is part of it.

|

Sorry for the extremely late reply. I wanted to respond once I had the basic implementation up and running, but time worked against me again and I kind of forgot to reply.

Quote from Ru5tK1ng

I'm assuming 1633 is part of it.

No.

Quote from Ru5tK1ng

what bigger issue was revealed?

I will try my best to explain this.

In this ticket, it was found that the tickrates of clients and servers differ.

This means that, inevitably, one is going to end up behind the other over time: after 28 seconds, the Windows clients are so far behind that they lose one tic, and around the moment this occurs, the server's ticking gets closer and closer to the client's, which is what causes the "desync".

At this point, when the client's and the server's ticks happen almost at the same time, the server seems to alternate between receiving the client's movement commands before and after ticking.

This produces what Alex called the "jackhammering effect", where in the worst case the server consistently processes 2 movement commands (the previous one, which was received AFTER ticking, and the current one) and then waits for the next tic.

Obviously this makes the player extremely jittery.
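The effect can be reproduced with a toy model, assuming a fixed 28 ms tic, a client that sends exactly one command per tic, and a server that processes every command that has arrived by the time it ticks. `commandsPerTick` is a hypothetical helper, not engine code. When commands arrive close to the server's tick boundary, ±1 ms of network jitter is enough to make the server alternate between processing 0 and 2 commands, whereas the same jitter is harmless when commands arrive half a tic away from the boundary.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

constexpr int kTicMs = 28;  // assumed tic length for this illustration

// Client command k is sent every tic and arrives at the server at
// k * kTicMs + phaseMs + jitterMs[k % jitterMs.size()] (ms, server time).
// Server tic n runs at n * kTicMs and processes every command that arrived
// at or before that moment. Returns how many commands each tic processes.
std::vector<int> commandsPerTick(int numTicks, int phaseMs,
                                 const std::vector<int>& jitterMs) {
    assert(numTicks > 0 && !jitterMs.empty());
    std::vector<int> counts(static_cast<std::size_t>(numTicks), 0);
    for (int k = 0; k < numTicks; ++k) {
        const int arrival = k * kTicMs + phaseMs +
                            jitterMs[static_cast<std::size_t>(k) % jitterMs.size()];
        // First server tic whose time is at or after the arrival time.
        const int tic = arrival <= 0 ? 0 : (arrival + kTicMs - 1) / kTicMs;
        if (tic < numTicks) {
            ++counts[static_cast<std::size_t>(tic)];
        }
    }
    return counts;
}
```

With jitter `{+1, -1}`, a phase of 0 ms yields 0, 2, 0, 2, ... commands per tic (the jackhammering), while a phase of 14 ms yields a steady 1 per tic after the first.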

The problem is that once the ticrates were actually fixed and made consistent across clients/servers, on rare occasions a connecting client would get the jackhammering effect except this time permanently.

This is presumably due to the fact that the clients/servers do not lag behind relative to each other anymore which implies that a client that would tick almost exactly at the same time as its server by "chance" would do so permanently.

I'm not sure what causes it to happen in the first place. Searching for this kind of problem didn't turn up anything useful, and apparently no other game engine has run into it, which made me doubt what was even happening.

Even so, I tried to debug further but couldn't find anything else useful on this issue.

After that, I decided to work on smoothing the ticbuffer, which would not only fix (or kludge, if it's not something that is supposed to happen) the jackhammering problem but also benefit online play in general.

In particular, I expect this will come close to completely solving 0002491.

The way this works is simple: detect if movement command "drops" occur frequently and if so simply apply a "safe latency" to the processing in such a way that the movement will end up being completely smooth.

I think this is the most logical way to solve this: the jittery player shouldn't even notice, since both prediction and unlagged should account for the extra latency, and instead of being very hard to hit, they will appear much, much smoother to outside observers.
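A rough sketch of such a regulator, under illustrative assumptions (the drop window, the threshold, and the rule of building up one extra queued command are choices made for this example; `RegulatedTicBuffer` is a hypothetical class, not the actual Zandronum implementation):

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical "regulated" per-client tic buffer.
// Unregulated, every tic processes all commands that have arrived. If too
// many recent tics processed nothing ("drops"), the buffer switches to
// regulated mode: it waits until two commands are queued (one extra tic of
// latency), then processes one command per tic, and only processes more
// while more than one command would remain queued.
class RegulatedTicBuffer {
public:
    RegulatedTicBuffer(std::size_t window, std::size_t dropThreshold)
        : window_(window), dropThreshold_(dropThreshold) {
        assert(window > 0 && dropThreshold > 0 && dropThreshold <= window);
    }

    void receive(int command) { pending_.push_back(command); }

    bool regulating() const { return regulating_; }

    // Runs one server tic and returns the commands processed during it.
    std::vector<int> tick() {
        std::vector<int> processed;
        if (!regulating_) {
            processed.assign(pending_.begin(), pending_.end());
            pending_.clear();
            recordTic(processed.empty());
            return processed;
        }
        if (!primed_) {
            if (pending_.size() < 2) {
                return processed;  // still building up the extra tic of latency
            }
            primed_ = true;
        }
        while (!pending_.empty() && (processed.empty() || pending_.size() > 1)) {
            processed.push_back(pending_.front());
            pending_.pop_front();
        }
        return processed;
    }

private:
    // Track drops over the last `window_` tics and enable regulation once
    // they reach the threshold.
    void recordTic(bool dropped) {
        recentDrops_.push_back(dropped);
        if (recentDrops_.size() > window_) {
            recentDrops_.pop_front();
        }
        std::size_t drops = 0;
        for (bool d : recentDrops_) {
            drops += d ? 1 : 0;
        }
        if (drops >= dropThreshold_) {
            regulating_ = true;
        }
    }

    std::size_t window_;
    std::size_t dropThreshold_;
    std::deque<int> pending_;
    std::deque<bool> recentDrops_;
    bool regulating_ = false;
    bool primed_ = false;
};
```

Fed the alternating 0/2 arrival pattern of the jackhammering case, this buffer switches to regulated mode after two drops in a four-tic window and from then on processes exactly one command per tic.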

I attached a demo (jack.cld) in which a player experiences the jackhammering effect followed by the new ticbuffer "regulator" being turned on.

This means that players experiencing this problem will be completely smooth to outside observers regardless but at the expense of having one extra tic of latency.

I also attached a much worse example using gamer's proxy to simulate a jittery player (jitter.cld). |

|

Since the ticbuffer smoothing is ready to be reviewed, this needs to be reviewed as well. |

|

(0019211) StrikerMan780, 2018-05-07 02:07 (edited on: 2018-05-07 05:58)

There seems to be an issue with this in the latest 3.1 build. Player and actor movement is no longer interpolated; it looks like it's running at 35 fps, even with an uncapped framerate. This happens both online and offline.

Everything else seems fine however.

|

(0023304) unknownna, 2024-03-06 12:27 (edited on: 2024-03-12 11:49)

Quote from Leonard

on rare occasions a connecting client would get the jackhammering effect except this time permanently.

Hey, was this ever added to 3.1, or looked into any further?

This issue is still present in 3.1. It's especially noticeable if you connect with some latency, for instance with 300 ping emulated.

If you're unlucky and connect to the server at the wrong tick, all the other players will permanently jitter/jackhammer around needlessly. Their player body movements aren't as smooth when you circle-strafe around each other while fighting. It's actually rather bad when it happens.

When the bug occurs, the impression from your POV is that the jittering players move slightly faster all the time due to all the micro-skipping they do.

Additionally, other clients see you as skipping as well. Glitched clients affect both themselves and others on the server.

The only way to fix it is to quit and restart the program and hope that you get better luck upon the next instance of starting the program and joining the server.

To sum it up so far:

* Clients connected with a bad tick sync will visually jitter/jackhammer around to everyone else, and they in turn will perceive everyone else as jittering as well.

* Clients connected with a good tick sync will see other clients with good tick syncs moving smoothly.

* To fix it, you have to quit and restart the program and hope for the best upon connecting to the server again.

* Because of this desync, you effectively have 2 different positions of the other clients flickering back and forth that you can hit successfully with a hitscan weapon.

And to add to the final point, because of this permanent desync and flickering of 2 different positions rapidly, it could explain this issue:

Quote from unknownna

The unlagged seems to act odd when firing immediately upon passing and bumping into another player close by, allowing you to seemingly hit behind the player in thin air and still hit.

Another effect of this is that shots with a wide spread like the SSG aimed at the center of your target are more likely to miss some pellets the further away the target is, because the target is rapidly bouncing back and forth between 2 points. Players are thus potentially harder to hit the further away they are from you in Zandronum.

I updated Leonard's example wad to easily reproduce the issue and also gave it Quake 3 hit sounds for target checks and made the hitbox sprites more intuitive. There are 2 pistols, one fast like the chaingun, and one slow like the shotgun. Connect 2 clients to the server and use an emulated ping of 300 on one client to easily see the issue when it occurs. You can 100% hit the flickering position that is incorrect, and you even have a dead zone in the middle of the 2 positions where no pellets hit. It's most definitely bugged.

I tested various timings to use when joining the server immediately after starting the server exe.

Joining after 6-7 seconds does not seem to be enough to trigger the issue. But any later than that, and it almost seems to have something to do with the time being an even or odd number, as if some rounding error causes the player positions to fluctuate between 2 tics permanently. I'd say it's 1 tic off when it glitches.

https://web.archive.org/web/20181107022429/http://www.mine-control.com:80/zack/timesync/timesync.html

Maybe this link has some information that can help? I can't really do anything more from here until someone looks into it further.

|

(0023381) unknownna, 2024-03-13 14:08 (edited on: 2024-04-03 10:01)

Thanks for that! I tested the latest Q-Zandronum 1.4.11, which I assume has the fix, and it's much worse there, and the unlagged is extremely broken. The client-side movement prediction of your own player also jitters a lot. Whatever it's doing is not the correct way at the moment.

Back to regular Zandronum, turning the tic buffer off doesn't improve the issue, so it seems to be something more fundamental going on.

With 2 clients standing still on the scrolling floor, with one client using 300 emulated ping, older versions of Zandronum (1.0, 1.1, 1.3, 1.2.2, 2.1.2 and 3.0) seem to be very smooth until 16 seconds pass, at which it starts to jitter for 13-14 seconds before correcting itself and normalizing again, and it loops like that over and over again. This time loop is 100% consistent.

The permanent time desync jitter starts to appear in 3.1 after the fixes.

So it's a time drift issue after all, but Q-Zandronum's fix is not the correct one since it glitches it out even further.

Quote from unknownna

older versions of Zandronum (1.0, 1.1, 1.3, 1.2.2, 2.1.2 and 3.0) seem to be very smooth until 16 seconds pass, at which it starts to jitter for 13-14 seconds before correcting itself and normalizing again, and it loops like that over and over again. This time loop is 100% consistent.

After testing this further, it means that prior to 3.1, the unlagged position of your target would be shifted forward every 16 seconds and flicker rapidly between 2 points for 13-14 seconds until it normalized. Because of this, you could hit even further ahead of the player than before once these 16 seconds passed. And since it was already 1 tic off because of a separate issue, you had to aim extra far ahead to land a hit. The unlagged would break every 16 seconds in older versions of Zandronum.

The difference is that it's now permanent, and it's very likely to break often for incoming clients. It's not too fun to play the game in its current state, just saying.

|

Thanks a lot for your detailed analysis, and for testing the aforementioned commit from Q-Zandronum! I was hoping that Q-Zandronum's approach was good enough and we could easily backport it.

Recently, I started working on adding time synchronization using the information in the link you posted in https://zandronum.com/tracker/view.php?id=3334#c23304. It's not 100% complete, and I haven't had an opportunity to thoroughly test it to see if it fixes the issues you mentioned. I'm afraid that I won't have the fix ready for the next 3.2 beta that might come in a few days, but I'll be happy to share test builds with you afterwards. |

|

Incredible, looking forward to it! |

|

Hello, I'm finally back with an update:

After spending a lot of time trying to sync the times between the server and client (via https://web.archive.org/web/20181107022429/http://www.mine-control.com:80/zack/timesync/timesync.html), this didn't help fix the stuttering for me. At best, it might've helped reduce it a bit (but could break when the client's ping fluctuated), and at worst, it made the stuttering more severe than when the times weren't synced. So, it seems that syncing the client's clock to the server's isn't a good solution to fixing this issue.
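For reference, the scheme described in the linked article works roughly like this: the client timestamps a request, the server stamps its own time into the reply, and the client takes half the round trip as the one-way latency; several such samples are sorted by latency, samples more than about one standard deviation above the median latency are discarded, and the remaining clock deltas are averaged. A minimal sketch, assuming millisecond timestamps and symmetric latency; the type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One time-request round trip, all times in milliseconds.
struct TimeSample {
    double clientSendMs;     // client clock when the request was sent
    double serverStampMs;    // server clock when the server answered
    double clientReceiveMs;  // client clock when the answer arrived
};

struct SampleEstimate {
    double latencyMs;  // estimated one-way latency (half the round trip)
    double deltaMs;    // estimated server clock minus client clock
};

SampleEstimate estimate(const TimeSample& s) {
    const double latency = (s.clientReceiveMs - s.clientSendMs) / 2.0;
    // The server stamp is about `latency` old by the time the reply arrives.
    return {latency, s.serverStampMs + latency - s.clientReceiveMs};
}

// Sort samples by latency, take the median latency (upper middle for an
// even count), drop samples whose latency is more than one standard
// deviation above the median, and average the clock deltas of the rest.
double estimateClockDelta(const std::vector<TimeSample>& samples) {
    assert(!samples.empty());
    std::vector<SampleEstimate> est;
    for (const TimeSample& s : samples) {
        est.push_back(estimate(s));
    }
    std::sort(est.begin(), est.end(),
              [](const SampleEstimate& a, const SampleEstimate& b) {
                  return a.latencyMs < b.latencyMs;
              });
    const double median = est[est.size() / 2].latencyMs;

    double mean = 0.0;
    for (const SampleEstimate& e : est) mean += e.latencyMs;
    mean /= static_cast<double>(est.size());
    double variance = 0.0;
    for (const SampleEstimate& e : est) {
        variance += (e.latencyMs - mean) * (e.latencyMs - mean);
    }
    const double stddev = std::sqrt(variance / static_cast<double>(est.size()));

    double sum = 0.0;
    std::size_t kept = 0;
    for (const SampleEstimate& e : est) {
        if (e.latencyMs <= median + stddev) {
            sum += e.deltaMs;
            ++kept;
        }
    }
    return sum / static_cast<double>(kept);  // the median sample always passes
}
```

With five clean samples (20 ms each way, server clock 500 ms ahead) plus one sample with asymmetric latency whose naive delta is 545 ms, the outlier is discarded and the estimate is exactly 500 ms.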

This brings me to another solution: In 3.1-alpha, I worked on the skip correction, a server-sided feature that extrapolated the movement of players when their commands didn't arrive on the server's end normally (i.e. during packet loss or ping spikes). The idea was to predict where the player was moving using their last processed move command. When the late move commands finally arrive, the server "backtracked" the player's movement, meaning that it restored the player's position, velocity, and orientation to what they were before extrapolation, then processed all late move commands instantly. This meant that the player could move smoothly for others, even when they're lagging, without their ticbuffer overflowing with late commands which caused them to skip.
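The extrapolate-then-backtrack idea can be illustrated with a toy one-dimensional model. This is only a sketch under simplifying assumptions (the player state is just position and velocity, and each command sets the velocity directly); `SkipCorrector` and the other names are hypothetical and do not reflect the real implementation. Its `maxTics` parameter plays the role that the value of `sv_smoothplayers` plays on the server.

```cpp
#include <cassert>
#include <vector>

// Toy 1-D player state; a real player also has other axes, angles, etc.
struct PlayerState {
    double position = 0.0;
    double velocity = 0.0;
};

struct MoveCommand {
    double speed;  // desired velocity for this tic
};

void applyCommand(PlayerState& p, const MoveCommand& cmd) {
    p.velocity = cmd.speed;
    p.position += p.velocity;
}

// While commands are late, extrapolate with the last processed command for
// at most maxTics tics. When the late commands arrive, restore the state
// saved before extrapolating and replay the real commands instead.
class SkipCorrector {
public:
    explicit SkipCorrector(int maxTics) : maxTics_(maxTics) { assert(maxTics >= 0); }

    void processOnTime(PlayerState& p, const MoveCommand& cmd) {
        applyCommand(p, cmd);
        lastCommand_ = cmd;
    }

    // Called on a tic where no command arrived.
    void extrapolate(PlayerState& p) {
        if (extrapolatedTics_ == 0) {
            saved_ = p;  // remember the pre-extrapolation state
        }
        if (extrapolatedTics_ < maxTics_) {
            applyCommand(p, lastCommand_);
            ++extrapolatedTics_;
        }
    }

    // Late commands finally arrived: backtrack and process them all at once.
    void backtrack(PlayerState& p, const std::vector<MoveCommand>& late) {
        if (extrapolatedTics_ > 0) {
            p = saved_;
        }
        for (const MoveCommand& cmd : late) {
            processOnTime(p, cmd);
        }
        extrapolatedTics_ = 0;
    }

private:
    int maxTics_;
    int extrapolatedTics_ = 0;
    PlayerState saved_;
    MoveCommand lastCommand_{0.0};
};
```

In a run where two commands arrive late, the player keeps moving during those tics, and once the real commands arrive the final position matches what on-time processing would have produced.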

While the feature itself already exists in Zandronum, there were lots of unwanted side effects that caused problems for players who tested the 3.1-alpha builds. I disabled the feature entirely in the stable release, so you can only use it in test builds. I intended to fix these side effects and improve the skip correction for 3.2 so that the feature can finally be used as it should be. Looking at it now, I also think it would be nice if the skip correction wasn't only server-sided, and clients could use it for themselves in case the stuttering only happens for them.

To enable the skip correction for yourself, host a 3.2-alpha server and set "sv_smoothplayers" anywhere between 1-3 (the value represents the number of ticks the server will extrapolate the player for). When using the example WAD and following the steps outlined in https://zandronum.com/tracker/view.php?id=3334#c23304, enabling the skip correction did fix the stuttering for me (maybe not perfectly, but certainly better than without it). Let me know how it works for you. |

|

(0023757) unknownna, 2024-06-08 18:01 (edited on: 2024-07-03 04:17)

Hey, and thanks for the update. Appreciate it.

At first it made no difference to me, but after some more thorough testing, here are my findings:

* sv_smoothplayers 1 fixes the issue for the most part when you observe a client that jitters between 2 ticks, but only if you connect with a good tick sync on top of that.

However, there still seems to be some timing issue here, and if your tick sync is slightly off, it'll still flicker a bit permanently. If you restart the .exe and get a good tick sync however, it's incredibly good and like a dream.

* sv_smoothplayers 1 works partially when observing a client that jitters between 3 ticks. Without it enabled, you have 3 different positions the client flickers back and forth between that you can hit. Setting it to 1 reduces these 3 positions to 2.

Setting it to 2 or 3 makes no difference compared to 1 and is no improvement. This is the glitch and flickering that absolutely can't be fixed unless the .exe is restarted.

These were the only 2 issues I noticed. For some reason, you sometimes get a client that jitters between 3 ticks instead of 2.

And the only way to get a better tick sync is to restart the .exe. Nothing else will do, no matter how many times you try to disconnect and reconnect from the console.

I think there's still room for some improvement here. If we can somehow get the gameplay to always be like "sv_smoothplayers 1 + a good tick sync" it would play like a dream.

Edit:

What all this tells me, since you can get lucky and get a perfect sync without using the tic buffer, is that every time a client .exe starts, it "hits" the server at one of 3 tick offsets upon connecting:

1st tick: Completely smooth if it lands right. Unlagged is perfect since there's no jitter and flickering between different ticks.

2nd tick: Clients flicker between 2 ticks. sv_smoothplayers 1 can fix this if your client hits exactly at the 2nd tick. However, if the offset is slightly between the 1st and 3rd tick, it still flickers, although much less.

3rd tick: Clients flicker between 3 ticks, sv_smoothplayers 1 can reduce the 3 ticks to 2, but can't fix it completely at the moment. And I think this 3rd tick issue can maybe only happen if the other client also is synced badly to the server.

And for some reason I couldn't fix it with sv_smoothplayers 1 when testing it again today, until I closed the other client and restarted the .exe on it. It's such a weird issue. It's as if each client has its own sync to the server, and because they're synced at different tick offsets, the settings that work in one way won't work when the tick offsets are different the next time.

One problem with sv_smoothplayers 1 however is that it causes client prediction errors locally.

Edit2:

Another observation: a client's cl_capfps value makes a big difference. A client with cl_capfps set to 1 will not experience the same amount of jitter as a client with uncapped FPS. Unlagged can, for instance, appear perfect with cl_capfps set to 1, with no multiple targets flickering, but when you turn it off, the other client you observe suddenly starts to jitter between 2 points that you can hit. The unlagged behavior breaks even worse with uncapped FPS.

I personally always play with cl_capfps set to 1, so I didn't notice this until now.

And when you get a bad sync, your client seems to affect the server, which means that bots see you flickering back and forth as well, and thus their aim is very shaky when fighting them.

And you can use cl_predict_players 0 combined with cl_capfps 0 and the chasecam to see yourself jitter.

For some reason the ping makes a difference. Using an emulated ping of 160, if I connect with a lucky sync that works for 160 ping, changing the ping lower or higher makes it jitter worse.

When you get a good lucky sync, it's pretty much as smooth as offline play, especially with cl_capfps 1. You can for instance bump into players and not jitter like you normally would.

In addition, clients playing with cl_capfps set to 0 will actually jitter more to other players observing them.

Edit3:

Quote from unknownna

For some reason the ping makes a difference. Using an emulated ping of 160, if I connect with a lucky sync that works for 160 ping, changing the ping lower or higher makes it jitter worse.

The jitter seems to harmonize with every 50-60ms of ping added or subtracted, give or take.

For a sync that works for 160 ping, these pings will be just as smooth for the most part:

40: Good and smooth.

100: Good and smooth.

210: Good and smooth.

270: Good and smooth.

325: Good and smooth.

380: Good and smooth.

440: Good and smooth.

More specifically, after testing more, I think the exact value might be 56ms for adding and 57ms for subtracting.

So for a good lucky sync at 160 ping, these pings seem to be as smooth:

46: Good and smooth. -57ms

103: Good and smooth. -57ms

160: Baseline.

216: Good and smooth. +56ms

272: Good and smooth. +56ms

328: Good and smooth. +56ms

384: Good and smooth. +56ms

440: Good and smooth. +56ms

It must be the Windows timer of 28ms x 2 = 56ms, with 1 extra ms for subtracting.

It seems that, with the way things currently work, the Windows timer isn't playing nice with the server timer, and there might be some relativity problem on top of it, where an incremental unit of time used for the initial sync doesn't occur at the same moment on the client and the server, though I'm not sure about the latter. I think I've tested and mapped out the issue as well as I can for now. I'd like to hear your thoughts from here.

Quote from Leonard

I attached a demo (jack.cld) in which a player experiences the jackhammering effect followed by the new ticbuffer "regulator" being turned on.

This means that players experiencing this problem will be completely smooth to outside observers regardless but at the expense of having one extra tic of latency.

Is this the way things work at the moment? Not only does the intended behavior not seem to work at all in my testing, it's actually worse than 3.0, and this forced latency should be unacceptable: 1 tic of difference at Doom's speed is the difference between successfully moving behind a wall and getting hit by an SSG shot.
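The trade-off in the quoted description (smooth for observers, at the cost of one extra tic of latency) can be illustrated with a toy C++ model of a tic buffer that waits before consuming commands. This is an assumption about how such a regulator could behave, not Zandronum's actual code; `regulate` and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model of a tic buffer "regulator" (not Zandronum's code).
// arrivals[i] is the number of move commands that reached the server during
// tic i. Consumption starts delayTics tics after the first command arrives;
// from then on, at most one queued command is executed per tic.
// Returns how many commands were executed on each tic.
std::vector<int> regulate(const std::vector<int>& arrivals, int delayTics) {
    assert(delayTics >= 0);
    std::vector<int> executed(arrivals.size(), 0);
    int queued = 0;
    int startTic = -1;  // -1 until the first command arrives
    for (std::size_t tic = 0; tic < arrivals.size(); ++tic) {
        assert(arrivals[tic] >= 0);
        queued += arrivals[tic];
        if (startTic < 0 && queued > 0) startTic = static_cast<int>(tic) + delayTics;
        if (startTic >= 0 && static_cast<int>(tic) >= startTic && queued > 0) {
            --queued;  // run one buffered command this tic
            executed[tic] = 1;
        }
    }
    return executed;
}
```

With arrivals of 1, 0, 2, 1, 0, 2 commands per tic, starting immediately (`delayTics = 0`) still stalls on the second tic, while waiting one tic yields a steady one command per tic from then on, at the cost of running every command one tic behind its nominal schedule.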

Another observation: Going into fullscreen mode with Alt+Enter after having gotten a good tick sync at 160 ping in windowed mode with cl_predict_players 0 and cl_capfps 0 causes your player and other clients you observe to start to jitter. For some reason the tick sync gets worse when entering fullscreen mode from windowed mode. And it's a bad and nasty jitter that can't be dialed out by altering the ping, it's absolutely horrendous. Oversizing the window without going into fullscreen also introduces additional minor jitter, but not nearly as much as when entering fullscreen mode. This means that fullscreen players are at a disadvantage against windowed players since they will jitter more permanently.

After looking around for a while, I found some excellent links with discussions on the tick offset issue, and they also talk about the clock sync algorithm found in the link I posted earlier:

https://gamedev.stackexchange.com/questions/206851/how-to-sync-ticksframes-between-two-peers

https://gamedev.stackexchange.com/questions/18766/network-client-server-message-exchange-and-clock-synchronization-help?rq=1

https://gamedev.stackexchange.com/questions/164367/clock-synchronization?rq=1

https://gamedev.stackexchange.com/questions/55508/start-timer-in-two-clients-the-same-time/55515#55515

I hope they help.

Edit4:

Quote from Kaminsky

So, it seems that syncing the client's clock to the server's isn't a good solution to fixing this issue.

If syncing the clocks initially by hopefully making them tick at the same offset wasn't enough to fix the issue, then we must perhaps offset the client's gametic by "x" amount of milliseconds, where x is how many milliseconds the client actually started to tick behind the server in real-life time.

Since the jitter harmonizes with 56ms and never seems to be off by more than 3 ticks, we can maybe assume that each tick is 28ms long and multiply it by 3 to get 84ms. This is how far off the client's ticking can be at worst.

This means that the client's ticking offset in relation to the server is off by anywhere between 0ms (perfect) to 84ms (lots of jitter) depending on when the ticker synchronizes with the server by chance when starting the .exe.

The question is, how do we make the client and server aware of how far off it is, even though they think they are aligned? How can the client or server detect the flicker/jitter and attempt to correct and re-sync it?

From my current perspective, this problem has nothing to do with ping spikes, nothing to do with dropped packets, nothing to do with extrapolating/interpolating other players, and nothing to do with the tic buffer. It has everything to do with each client's ticking offset being off by some milliseconds compared to the server's.

I think that trying to fix this by extrapolating is maybe something of a hack and will also not work since each client has a different gametic or clock offset to the server, which means that one setting will only work by chance on some offsets and not on others.

If each client could somehow correct and re-sync their own ticking offset towards the server's, then I believe the problem would go away naturally. As long as each client knows they're synced towards a certain offset, and other clients on their own ends are also synced towards the same offset, they should all naturally appear smooth and centered to one another.

Really, from my perspective it seems to boil down to these 3 points:

* Every client's gametic or clock tick offset is off by 0ms-84ms in real-life time, even if they tick "at the same time" in the code. Being able to experience and enjoy smooth gameplay is a matter of chance currently.

* Fullscreen and oversized windowed mode compared to regular windowed mode introduces additional permanent jitter unrelated to the above.

* The tic buffer "regulator" is not doing what it's supposed to do. It might not even be needed if the fundamental issue is fixed first.

In fact, I also believe that a lot of the complaints of a "weak SSG" in Skulltag throughout the years can actually be traced back to this exact issue.

Because of the tick offset desyncs between all clients and the server, they all flicker/jitter and effectively rapidly teleport between 2 points, making shots much harder to hit the further away a player is moving sideways from you. Fixing this would "magically" make the SSG feel a lot stronger as more pellets would actually hit the now-centered targets instead of missing, trust me.

Some additional links for reading:

https://gamedev.net/forums/topic/696756-command-frames-and-tick-synchronization/5378847/

https://gamedev.net/forums/topic/652377-network-tick-rates/5126249/

https://gamedev.net/forums/topic/706887-clientserver-sync-reasoning-and-specific-techniques/5426971/

https://www.gamedev.net/forums/topic/704579-need-help-understanding-tick-sync-tick-offset/5416883/

https://y4pp.wordpress.com/2014/06/04/online-multiplayer-proof-of-concept/#part2

https://gamedev.net/forums/topic/707830-clientserver-clock-sync-issue-confirmation-and-solutions/5430541/

https://devforum.roblox.com/t/do-not-use-high-precision-clock-syncing-tech-between-clients-and-server-with-accuracy-of-1ms/769346

https://devforum.roblox.com/t/how-do-you-sync-server-tick-and-client-tick/440508/3

https://devforum.roblox.com/t/could-someone-please-explain-the-timesyncmanager-module-made-by-quenty/426057/3

(0024050) unknownna, 2024-09-24 22:19 (edited on: 2024-10-22 05:44)

Something interesting was observed with the new "connection strength" feature on the scoreboard in 3.2. If you get a good tick sync to the server, you will always have a good 4/4 connection strength to the server. If you get a bad tick offset sync however, the client will appear as having a terrible connection of 1/4 strength.

So, knowing that, if you quit and restart the client .exe until you get a good tick sync at 160 ping, all the pings listed in my note above will always show a 4/4 connection strength, whereas any of those pings minus 28ms will yield a dramatically diminished connection strength of 1/4.

But every 56ms apart yields a strong connection of 4/4.

There's a clear reproducible pattern at play here, we just need to figure out what causes it.

It's very important that you check this with "cl_capfps 0" "chase" and "cl_predict_players 0" all combined so you can observe your own client jitter and skip around while moving. I recommend binding them to keys for easy and quick toggling.

Kaminsky, when you attempted to sync the clocks, did you only do it a few times at the start upon connecting, or did you do it all the time? From what I've read in all the links posted in my note above, the time syncing should only occur a few times at the start and no more after that. It's just important to line up the client and server ticking so they don't hit at the wrong offset relative to one another.
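One common way to do a one-off clock sync at connect time is the NTP-style offset estimate (the on-wire calculation in RFC 5905): take a few request/response exchanges and trust the one with the smallest round trip. The minimal C++ sketch below shows that general technique only; `TimeSample`, `estimateOffset`, and the other names are hypothetical, and it assumes the outbound and return paths take roughly equal time.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// One request/response exchange; all times in milliseconds.
// t0 = client sends, t1 = server receives, t2 = server replies,
// t3 = client receives (t0/t3 on the client clock, t1/t2 on the server clock).
struct TimeSample {
    std::int64_t t0, t1, t2, t3;
};

// Round-trip network delay, excluding the server's processing time.
inline std::int64_t roundTripDelay(const TimeSample& s) {
    return (s.t3 - s.t0) - (s.t2 - s.t1);
}

// Estimated (server clock - client clock). Only exact when the outbound and
// return paths take the same time; integer division truncates.
inline std::int64_t clockOffset(const TimeSample& s) {
    return ((s.t1 - s.t0) + (s.t2 - s.t3)) / 2;
}

// Use a few exchanges taken at connect time and trust the one with the
// smallest round trip, since it was least affected by queuing delays.
inline std::int64_t estimateOffset(const std::vector<TimeSample>& samples) {
    assert(!samples.empty());
    const TimeSample* best = &samples.front();
    for (const TimeSample& s : samples)
        if (roundTripDelay(s) < roundTripDelay(*best)) best = &s;
    return clockOffset(*best);
}
```

For example, if the server clock is 100 ms ahead, an exchange with 20 ms each way yields an offset of exactly 100, while one with 60 ms out and 20 ms back yields 120; picking the lower round trip keeps the better estimate.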

I also suspect that the tick offset desync could be causing packet-loss issues for certain users, such as:

https://zandronum.com/tracker/view.php?id=1469#c22933

https://zandronum.com/tracker/view.php?id=4415

if the offset between the client's and server's ticking creates a kind of asymmetric connection, where arrivals on one side of the offset always drift further from the center than on the other, causing more missed packets on that side.

Edit:

I did another experiment, to see which pings I would land on that "synced" good to the server when a ping of 160 didn't sync.

At first I set the ping emulator to 160 and attempted to tweak and match it to the server by incrementing it by 1 and checking the client movement afterwards. When I found the ping that made the player move as smooth as possible, I left the ping emulator at that value before quitting and restarting the .exe. The goal was to get a good sync at 160 ping.

The way to test this quickly is to add the ping emulator port as a custom Zandronum server in the server browser, so you can connect to the server through it over and over again when it doesn't sync properly. Leave both "cl_capfps" and "cl_predict_players" at 0 and quickly flip into chasecam mode when connected to observe your own client's movement. If the player movement is smooth, it's a "good sync".

* 1st attempt: 156 or 212, smooth every 56ms apart.

* 2nd attempt: Impossible to get a good sync, all pings jittered. Closest was 181 ping only, but still some jitter.

* 3rd attempt: 184 ping or 240, smooth every 56ms apart.

* 4th attempt: 156 or 212 again.

* 5th attempt: Seemingly impossible to get a good sync again. Closest was 182-186 range, but still jitter.

* 6th attempt: Impossible to get a good sync again. Closest seemed to be 155 or 211 only, but still some jitter.

* 7th attempt: 178-179 or 234-235, very little jitter sporadically.

* 8th attempt: Impossible again, 181-183 range closest to smooth, but still jitter.

* 9th attempt: 180-181 or 236-237, but still some sporadic jitter.

* 10th attempt: Impossible again, 182-184 range smoothest, but still lots of jitter.

* 11th attempt: Impossible again, 181 smoothest, still jitter.

* 12th attempt: Impossible again.

And then I became frustrated and suspicious as to why I never got a sync at 160 ping, and tried reverting the ping emulator back to 160 every time instead of leaving it at the former setting before restarting the .exe and connecting to the server again. This seemed to change things up a little and made it work as I intended.

* 1st attempt: Synced badly at 160, was smooth at 180. Revert ping emulator back to 160.

* 2nd attempt: Synced badly at 160, was smooth at 155. Revert ping emulator back to 160.

* 3rd attempt: Synced badly at 160, was smoothest at 153, but still some jitter. Revert ping emulator back to 160.

* 4th attempt: Synced badly at 160, was smooth at 180. Revert ping emulator back to 160.

* 5th attempt: Finally synced great at 160.

What this makes me wonder is, does the server assume the client connects with a certain ping, and assumes that the client will never deviate from it somehow?

Maybe my assumption about the whole issue is completely wrong, I just hope I'm not sending you on a wild goose chase with no end in sight with the time and tick syncing. Maybe it has nothing to do with time at all, and has to do with the server assuming something wrong at some point about the client's ping?

Sometimes you can seemingly never get a good "sync" to the ticking, or whatever the heck the real problem is.

It almost feels like there's always 2 instances of the player running around, and if one of them is out of sync with the other, it jitters. It almost feels like one of these 2 instances land "between" ticks, where it's never quite smooth no matter how much you try to tweak your ping to it.

When you get the jitter and the client stops sending CLC_CLIENTMOVE commands, the client often keeps displaying the running animation while standing still after you release the movement keys. This is made much worse by setting cl_ticsperupdate to 2 or 3.

Why on earth are the movement commands dropped, but not the +attack and +use commands, or any other commands? All the other buttons seem to be instantly responsive; it's just the movement commands that are dropped. You never, for instance, fire any NULL rockets or anything like that, so maybe it's not a tick sync issue? Maybe there's just some fundamental bug with the way player movement is handled on the server? Does the server assume something wrong about the client's movement for any given ping?

There's almost got to be something wrong with the way the server handles movement commands in relation to the client's ping upon connecting and the client's ping after being connected, given that the movement commands drop often when the ping is 1 tick (28ms) off, but are smooth again when it's 2 ticks (56ms) off.

Edit2:

I repeated the same 2nd experiment with different pings, to see which latencies the sync would land onto when connecting to the server.

Connect with a ping of 140, see which pings sync best, revert back to 140 every time before restarting .exe:

* 1st: 122, smooth every 56ms apart.

* 2nd: Impossible, lots of jitter, client landed between ticks.

* 3rd: 127

* 4th: 128

* 5th: 127

* 6th: 156

* 7th: 127

* 8th: 158

* 9th: 158

* 10th: 122

It seemed to land on different pings compared to what I usually get when leaving the ping emulator at 160. The good syncs seemed to hit much lower in general when connecting with a ping of 140, in the 120s mostly. And in retrospect after having done the other experiments below, it seems to have landed less between ticks.

Connect with a ping of 200, see which pings sync best, revert back to 200 before restarting .exe:

* 1st: 160

* 2nd: 126

* 3rd: Closest was 153, still jitter, client landed between ticks.

* 4th: Closest was 157-158, still minor jitter, client landed between ticks.

* 5th: Closest was 127, jitter, client landed between ticks.

* 6th: 157

* 7th: 157-158

* 8th: Closest was 123-126, still jitter, client landed between ticks.

* 9th: Impossible, much jitter at any ping, client landed between ticks.

* 10th: Closest was 156, but still sporadic jitter, client landed between ticks.

The client seemed to land between ticks more often when using a ping of 200 to connect to the server, where I couldn't tweak the ping to match the sync towards the server's ticking.

Connect with a ping of 0, see which pings sync best, revert to 0 before restarting .exe:

* 1st: 156

* 2nd: Closest was 181-183, but still jitter, client landed between ticks.

* 3rd: 125

* 4th: 156

* 5th: Closest was 155, but still jitter, client landed between ticks.

* 6th: 126

* 7th: Impossible, jitter at all pings, client landed between ticks.

* 8th: 156

* 9th: Closest was 155, but still jitter, client landed between ticks.

* 10th: 157-158

This seemed to land a bit lower as well, and never at 160, but at 156-158 instead.

And then I attempted the same thing, but this time with "cl_startasspectator 0", just to test it. Connect with a ping of 0, see which pings sync best, revert to 0 before restarting .exe:

* 1st: 159

* 2nd: Closest was 155, still jitter, client landed between ticks.

* 3rd: Closest was 154-156, still sporadic jitter, client landed between ticks.

* 4th: 158-159

* 5th: Got a rare, never-before sync that lasted from 126-130 with minor jitter.

* 6th: Impossible, much jitter, client landed between ticks.

* 7th: Impossible, much jitter, client landed between ticks.

* 8th: Got the same rare sync at 126-130 with minor sporadic jitter.

* 9th: Closest was 123-124, but still lots of jitter, client landed between ticks.

* 10th: Got a rare sync all the way from 120-135 with very minor jitter.

Using "cl_startasspectator 0" seemed to change up the sync a little as well compared to the other testing conditions. I got some rare syncs that lasted for longer distances between ticks.

Ignoring the "fullscreen jitter" issue I found earlier, there seem to be 2 main issues. The 1st is where the client lands on a wrong tick offset (between ticks) compared to the server's, and thus can never become properly in sync with it no matter what. The 2nd is where the server can only guarantee smooth movement for the client at pings every other tick (56ms) apart. Any ping 1-28ms away from those every-other-tick points causes the jitter to appear and lots of movement commands to be dropped.

(0024130) Kaminsky, 2024-11-10 21:39 (edited on: 2024-11-10 21:44)

I finally have an update for this ticket. First and foremost, thank you so much for your detailed analysis and investigation into this issue! Sorry for the very late response.

I will say that I've been tackling this issue for about a month now, and after lots of debugging and testing, I concluded that the SNTP approach mentioned and linked above wasn't going to work to fix the stuttering. Simply put, there's too much variance (e.g. latency) and factors involved that made the approach ineffective. Furthermore, suddenly offsetting the client's clock by a huge amount in an attempt to synchronize it to the server's created many problems, and possibly more side effects than I was aware of.

I'll list two major reasons why the permanent stuttering was happening:

1. Networking isn't perfect, so sometimes a client or server's packets arrive at their destination a bit too soon, or too late.

2. Unlike the server, whose ticks are generally consistent (28-29 ms in length), the client's ticks tend to fluctuate a lot more, depending on their current frame rate (e.g. 25-32 ms in length). Rendering the game contributes significantly to those fluctuations. Some ticks are inevitably shorter or longer than others, and this affects when the client sends out commands to the server.
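To see why a few milliseconds of variation matter, here is a toy C++ model that counts how many client commands land in each server tic, assuming the server simply processes whatever has arrived by each tic. When commands nominally land 1 ms after a tic starts, a 2 ms variation pushes some into the previous tic, so the server sees 2, 0, 2, 0 commands; the same variation around the middle of the tic leaves exactly one command per tic. The 28 ms tic, the timings, and `commandsPerTic` are illustrative assumptions, not measurements or Zandronum code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical toy model: counts how many client commands land in each
// server tic, given their arrival times on the server clock (ms, with tic 0
// starting at 0) and a fixed server tic length. Arrivals outside the first
// numTics tics are ignored.
std::vector<int> commandsPerTic(const std::vector<double>& arrivalMs,
                                double ticMs, int numTics) {
    assert(ticMs > 0.0 && numTics > 0);
    std::vector<int> counts(static_cast<std::size_t>(numTics), 0);
    for (double t : arrivalMs) {
        const int tic = static_cast<int>(std::floor(t / ticMs));
        if (tic >= 0 && tic < numTics) ++counts[static_cast<std::size_t>(tic)];
    }
    return counts;
}
```

A tic with 0 commands followed by one with 2 is exactly the "stall, then catch up" pattern that shows up as stuttering to observers.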

With these in mind, I realized that for all intents and purposes, we shouldn't be concerned with trying to synchronize the client's clock to the server's clock. In fact, doing this actually made the stuttering worse than it was before, even for clients with very low pings. What we should be doing instead is adjusting the client's clock just enough so that their commands arrive roughly in the middle of a server's tick (i.e. not too soon after the tick started, and not too late before the next tick starts). This way, even if their commands arrive sooner or later than expected because of the two reasons mentioned above, they'll still arrive more consistently on the server's end. These adjustments to the client's clock are only a few milliseconds and are relatively safe to do. Thus, I put this theory to the test and pushed a new topic to the repository: https://foss.heptapod.net/zandronum/zandronum-stable/-/tree/topic/default/client-stutter-fix

Here's a detailed step-by-step description of what's happening now:

1. When the client connects, instead of the server telling the client to authenticate their map right away, it tells them to begin adjusting their clock. It sends a SVCC_BEGINCLOCKADJUSTMENT command so that the client changes its connection state to CTS_ADJUSTINGCLOCK.

2. For each tick while its connection state is CTS_ADJUSTINGCLOCK, the client sends a CLCC_SENDCLOCKUPDATE command to the server.

3A. When the server receives a CLCC_SENDCLOCKUPDATE command from the client, it saves the time it processed this command in CLIENT_s::lastClockUpdateTime.

3B. The server also saves the times it called G_Ticker in the current and previous ticks, and uses these epochs to determine how early or late within a tick the CLCC_SENDCLOCKUPDATE command arrived.

4A. After receiving 10 of these commands, the server has enough samples to reasonably determine if the client's commands are arriving somewhere in the middle. It checks for any outliers (i.e. any commands that arrived less than 7 ms after or before the previous/current G_Ticker calls).

4B. If any outliers were found, the server will instruct the client to offset their clock accordingly, then repeat steps 2-4.

4C. If no outliers were found (the clock adjustment completed successfully), or if after 20 attempts at telling the client to offset their clock there's still outliers (at which point we just abort the clock adjustment), the server tells the client to authenticate their map, like normal.
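The handshake in steps 1-4C can be summarized with a server-side C++ sketch. The constants (10 samples per round, a 7 ms margin, 20 attempts) come from the steps above; everything else is assumed: the names are hypothetical, each sample is stored as milliseconds since the tic's G_Ticker call, and because the steps only say the client is told to offset its clock "accordingly", the sketch picks the offset that moves the circular mean of the arrival times to the middle of the tic. The circular mean is used so that samples straddling a tic boundary (e.g. 27 ms and 3 ms in a 28 ms tic) average to 1 ms instead of 15 ms.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Constants taken from the steps above; all names are hypothetical.
constexpr int kSamplesPerRound = 10;   // step 4A: samples before judging
constexpr double kMinMarginMs = 7.0;   // step 4A: outlier threshold
constexpr int kMaxAttempts = 20;       // step 4C: give up after this many

// One CLCC_SENDCLOCKUPDATE arrival, stored as ms since the G_Ticker call
// that started the tic it landed in (step 3B).
struct ArrivalSample {
    double sinceTicStartMs;
};

struct RoundResult {
    bool hasOutliers;
    double adjustMs;  // positive: client should send later; negative: earlier
};

// Steps 4A/4B: flag samples within kMinMarginMs of either tic boundary and,
// if any exist, suggest the shift that centers the samples in the tic.
RoundResult evaluateRound(const std::vector<ArrivalSample>& samples, double ticMs) {
    assert(!samples.empty() && ticMs > 2.0 * kMinMarginMs);
    const double kTwoPi = 6.283185307179586;
    bool outliers = false;
    double sumSin = 0.0, sumCos = 0.0;
    for (const ArrivalSample& s : samples) {
        const double phase = s.sinceTicStartMs;
        if (phase < kMinMarginMs || ticMs - phase < kMinMarginMs) outliers = true;
        sumSin += std::sin(kTwoPi * phase / ticMs);
        sumCos += std::cos(kTwoPi * phase / ticMs);
    }
    // Circular mean of the arrival phases, mapped back into [0, ticMs).
    double meanPhase = std::atan2(sumSin, sumCos) / kTwoPi * ticMs;
    if (meanPhase < 0.0) meanPhase += ticMs;
    const double adjust = ticMs / 2.0 - meanPhase;
    return {outliers, outliers ? adjust : 0.0};
}

// Simulates steps 2-4C for a client whose commands land at phaseMs within the
// tic, plus a repeating jitter pattern. Returns how many offsets were sent.
int runHandshake(double phaseMs, const std::vector<double>& jitterMs, double ticMs) {
    assert(!jitterMs.empty());
    for (int attempt = 0; attempt < kMaxAttempts; ++attempt) {
        std::vector<ArrivalSample> batch;
        for (int i = 0; i < kSamplesPerRound; ++i) {
            const std::size_t j = static_cast<std::size_t>(i) % jitterMs.size();
            double t = std::fmod(phaseMs + jitterMs[j], ticMs);
            if (t < 0.0) t += ticMs;
            batch.push_back(ArrivalSample{t});
        }
        const RoundResult r = evaluateRound(batch, ticMs);
        if (!r.hasOutliers) return attempt;  // done: proceed to map authentication
        phaseMs += r.adjustMs;               // client offsets its clock, repeat
    }
    return kMaxAttempts;                     // aborted: proceed anyway
}
```

A client whose commands land around 1 ms into a 28 ms tic with ±2 ms of jitter needs one offset (about +13 ms) before every sample clears the 7 ms margin; one already landing mid-tic needs none.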

The caveat here is that it might take longer for clients to fully connect to a server. However, this should only take up to a few seconds, usually no more than 10 seconds in extreme cases. I'd say that this extra time is worth it, if the client's packets arrive more consistently now. From the testing I did so far, the extra time it took to connect was often around 1-3 seconds if my ping was no greater than 120. For 250+ ping, it took maybe around 5-10 seconds.

I tried testing this thoroughly, both on a LAN server with a ping emulator (and using all kinds of different pings) and a remote server, and every single time I tested it, the client's commands arrived consistently on the server's end and they were no longer stuttering, assuming that they weren't also suffering from severe ping spikes or packet loss. While all of this looks promising, I still haven't had an opportunity to get it tested with, or by, someone else. This still needs a lot of testing before I can conclude if it works well enough, which is why I haven't created a merge request for it yet.

I'd really appreciate it if you could download the auto-generated build(s) on Heptapod and test it thoroughly yourself. Feel free to provide any feedback, if possible. If everything seems to be working properly now, I might move ahead with creating that merge request. Thanks again for your dedication and effort!

Hey, thanks for the update and the test build, much appreciated!

I tested it a bit and made some notes below:

* The client lands on a wrong offset upon starting the .exe. Although this couldn't be fixed without restarting the .exe before, I noticed in the test build that it could be improved somewhat when using 2 clients to observe each other. One client appeared as smooth and centered to the other client after both reconnected to the server after several attempts. But I was unable to reproduce the improvement in another subsequent session.

I also couldn't improve the client's own sync to the server by reconnecting. The offset seems to be latched to some time offset set maybe deeper in the Windows OS? I did some searching and found out that Windows changed some timer stuff starting from Windows 10, build 1809. Maybe this is part of the reason why it glitches when starting the .exe?

https://www.overclock.net/threads/best-timer-resolution.1811992/?post_id=29362766#post-29362766

https://community.pcgamingwiki.com/files/file/2563-global-timer-resolution-requests-windows-11/

https://answers.microsoft.com/en-us/windows/forum/all/queryperformancefrequency-returns-10mhz-on-windows/44946807-5355-4b36-ba3e-43aa86ce30c0

* It still starts to lag between the 56ms steps. Pings that are 56ms apart are equally smooth, but when the ping drifts up or down toward the 28ms point in between, the client drops commands and jitters a lot. This is also observed by other clients, and can be seen through the connection strength feature on the scoreboard. The server isn't accounting for movement commands that are supposed to arrive in that one missing tick in the middle of each 56ms step.

* The fullscreen / oversized window jitter also remains. It's map-dependent (for example MAP07 in idl201x_a.wad); maps with lots of scrolling textures and whatnot are affected. This means that it will start to jitter even if the client has a good sync towards the server. This jitter is also observed by other clients looking at your client. So, because you lag locally with "cl_capfps 0", other clients, even with "cl_capfps 1" on their end, will see you skip around.

* The way the client perceives its own sync towards the server WILL AFFECT OTHER CLIENTS observing it.

Why can't the client improve its own sync towards the server by reconnecting? Why does it still start to jitter when the ping moves away from every other tick, i.e., between 56ms?

Would it be possible to allow the client to sync the clock in the background while in the game? It does take a bit long to connect to the server now. Maybe it could also be synced during intermission screens if needed, though I'm not sure if this is needed yet.

I think it still needs some improvement before it's good enough; it doesn't feel like it fixes the issues yet, but there's a glimmer of hope here. Permanent desyncs still occur after connecting to the server. I feel like there may be some deeper issue at play besides the time syncing, like some Windows OS timing issue. Does changing the server's tick period to 28ms, instead of 35Hz, fix or improve the issue for Windows users?

I appreciate you looking into this, and hope we can figure something out. It would be so incredible and amazing if we could fix this.

(0024136) Kaminsky, 2024-11-11 16:45 (edited on: 2024-11-11 17:13)

Hi, thanks a lot for your response and feedback.

Quote from "unknownna"

The offset seems to be latched to some time offset set maybe deeper in the Windows OS? I did some searching and found out that Windows changed some timer stuff starting from Windows 10, build 1809. Maybe this is part of the reason why it glitches when starting the .exe?

According to the links that you provided, that timer change since Windows 10 build 1809 would affect applications that didn't explicitly set a timer resolution via timeBeginPeriod. However, GZDoom and Zandronum already do this when the application starts up:

// Set the timer to be as accurate as possible
if (timeGetDevCaps (&tc, sizeof(tc)) != TIMERR_NOERROR)
    TimerPeriod = 1; // Assume minimum resolution of 1 ms
else
    TimerPeriod = tc.wPeriodMin;

timeBeginPeriod (TimerPeriod);

This is setting the resolution as low as possible (which will depend on your OS and hardware). So, as far as I can tell, the timer change in the aforementioned Windows build shouldn't be an issue. Is your PC, by chance, using a power-saving mode or some other setting that would make the minimum resolution higher than 1 ms? I can confirm that on my PC, the resolution is 1 ms.

Quote from "unknownna"

Why can't the client improve its own sync towards the server by reconnecting?

Are you really sure that reconnecting to the server didn't improve anything, or am I missing something? Every time the client leaves a network game, it resets its clock offset back to zero, and its clock gets re-adjusted the next time it connects to the server. So, no matter what ping you connect with, it should always adjust your clock enough to make it smooth.

At least from my own experience, if I drifted the client's ping by 28 ms while in-game to cause bad stuttering, then reconnected to the server with the same ping, it fixed the stuttering.

Quote from "unknownna"

Why does it still start to jitter when the ping moves away from every other tick, i.e., between 56ms?

Remember that the objective of the fix is to make the client's commands arrive as close to the middle of a server tick (which usually lasts 28-29 ms) as possible. This still depends on the client's own ping: during connection, the client's clock is adjusted according to whatever ping it has during that period.

If you increase or decrease the client's RTT (round-trip time) by 28 ms while in-game, then their commands will now arrive 14 ms sooner or later on the server's end, because the one-way trip changes by half as much as the RTT. This means that instead of arriving in the middle of the server's tick (which is what we want), they now arrive around the same time as the server ticks (which is what we don't want). Due to the fluctuations in ping and tick periods on the client's end that I mentioned earlier, the client's commands start arriving inconsistently (again), causing the stuttering to return.

However, if their RTT increases or decreases by 56 ms, then their commands will arrive 28 ms sooner or later on the server's end, which is roughly one whole server tick. Even though their ping changed, the commands still arrive around the middle of a server tick, which is still what we want. Thus, they don't stutter.
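The arithmetic in the last two paragraphs can be checked with a simplified model. This is a sketch only, not Zandronum's implementation: it assumes the client and server both tick at a nominal 35 Hz, that the one-way delay is exactly half the RTT, and that calibration happens once at connect time and is reset when leaving a game, as described above. `ClientClock`, `ArrivalPhaseMs` and `MarginToBoundaryMs` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Nominal server tick period in milliseconds (35 Hz).
constexpr double kServerTickMs = 1000.0 / 35.0;

// Simplified model (assumption): the client sends one command per tick at
// n * kServerTickMs + clockOffsetMs on the server's timeline, and each command
// needs rttMs / 2 to reach the server. Returns where inside a server tick the
// command arrives, between 0 and kServerTickMs.
double ArrivalPhaseMs(double clockOffsetMs, double rttMs) {
    assert(rttMs >= 0.0);
    const double phase = std::fmod(clockOffsetMs + rttMs / 2.0, kServerTickMs);
    return phase < 0.0 ? phase + kServerTickMs : phase;
}

// Distance in ms from an arrival phase to the nearest server tick boundary:
// about kServerTickMs / 2 is the goal, close to 0 is the bad case.
double MarginToBoundaryMs(double phaseMs) {
    return std::fmin(phaseMs, kServerTickMs - phaseMs);
}

// Hypothetical client clock state.
struct ClientClock {
    double offsetMs = 0.0;

    // On connect: choose the offset that makes commands arrive mid-tick
    // for the RTT measured while connecting.
    void Calibrate(double measuredRttMs) {
        offsetMs = kServerTickMs / 2.0 - ArrivalPhaseMs(0.0, measuredRttMs);
    }

    // On leaving a network game: drop the offset back to zero.
    void Reset() { offsetMs = 0.0; }
};
```

Calibrating at an 80 ms RTT puts arrivals exactly mid-tick (about 14.3 ms from either boundary). Shifting the RTT by 28 ms in either direction (52 or 108 ms) leaves arrivals only about 0.3 ms from a tick boundary, while shifting it by 56 ms (24 or 136 ms) brings them back to about 13.7 ms from the nearest boundary, which is the pattern described above.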

To be fair, I designed this fix assuming that the client's ping, on average, remains stable both during connection and while in the game. They might occasionally experience ping fluctuations or spikes that cause stuttering, but these are only temporary, and their ping should return to normal. I could try re-syncing their clock in the background while they're in the game, but this adds a whole new layer of complexity that I'm not sure is worth it. Besides, adjusting the clock is best done before the client has fully connected to the server, when rendering isn't involved yet and traffic between the client and server is low. How realistic is it that the client's ping would change drastically and permanently from what it was during connection?

Quote from "unknownna"

The fullscreen / oversized window jitter also remains. It's map-dependent (for example MAP07 in idl201x_a.wad), maps with lots of scrolling textures and whatnot are affected. This means that it will start to jitter even if the client has a good sync towards the server. This jitter is also observed by other clients looking at your client. So, because you lag locally with "cl_capfps 0", other clients, even with "cl_capfps 1" on their end, will see you skip around.

The client's frame rate can affect the rate at which it sends commands to the server. If the frame rate is low enough, the client might not send a command every 28-29 ms as it should. Unfortunately, this is going to be tough to address. I don't know yet whether handling this on a separate thread would help.
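A rough way to picture the frame-rate effect, assuming (this thread does not confirm it) that commands generated during a frame are only transmitted when that frame finishes. `SendTimesMs` is a hypothetical helper, not part of Zandronum.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical model (assumption): one command is generated per tic at
// i * ticMs, but it is only transmitted at the end of the rendered frame in
// which it was produced, i.e. at the next multiple of frameMs.
std::vector<double> SendTimesMs(double ticMs, double frameMs, int numTics) {
    assert(ticMs > 0.0 && frameMs > 0.0 && numTics >= 0);
    std::vector<double> sends;
    sends.reserve(numTics);
    for (int i = 1; i <= numTics; ++i) {
        const double generatedAt = i * ticMs;
        // Round up to the end of the frame that contains this tic.
        sends.push_back(std::ceil(generatedAt / frameMs) * frameMs);
    }
    return sends;
}
```

With 28.6 ms tics and 50 ms frames (20 fps), the first five commands go out at 50, 100, 100, 150 and 150 ms, i.e. in pairs followed by gaps, whereas with 1 ms frames they leave at 29, 58, 86 and 115 ms, close to one per tic.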

Quote from "unknownna"

The way the client perceives its own sync towards the server WILL AFFECT OTHER CLIENTS observing it.

I apologize, but can you elaborate a bit more on this statement? I don't think I quite understand what you mean by the client "perceiving its own sync towards the server".

Thanks again for the feedback!

(0024137)

unknownna

2024-11-11 17:39 (edited on: 2024-11-12 00:15)

Quote from Kaminsky

Are you really sure that reconnecting to the server didn't improve anything, or am I missing something? Every time the client leaves a network game, it resets its clock offset back to zero, and their clock gets re-adjusted the next time they connect to the server. So, no matter what ping you connect with, it should adjust their clock accordingly.

I find that it can in rare cases improve the sync marginally, but still not seem to fix it. It feels like it doesn't shift the offset enough or something.

Quote from Kaminsky

Is your PC, by chance, using a power-saving mode or some other setting that would make the minimum resolution higher than 1 ms? I can confirm that on my PC, the resolution is 1 ms.

I'm using an older laptop that's plugged into a power source. The power settings on Windows are set to "best performance", and the battery is set to "recommended". Flipping the battery setting to "high performance" doesn't seem to change anything.

Quote from Kaminsky

The client's frame rate can affect the rate at which they send out commands to the server. If it's low enough, they might not be sending out commands every 28-29 ms as they should. Unfortunately, this is going to be tough to address. I don't know yet if handling this on a separate thread might improve it, or if it won't work.

What I find strange is that this only happens online. Why does the client stutter on certain maps only when online? It's not as if my laptop can't handle the map. It feels like a bug caused by undefined behavior, similar to issue 0000459 (which even involves the same map).

I find it strange that you don't seem to run into any of these issues, whereas I get them most of the time. The sync is still terrible for PvP modes in its current state, and I think that's why it died off. Players get a good or bad sync by chance, and on top of that, if their ping happens to land on a spot between ticks, they stutter like there's no tomorrow. It was actually better pre-3.1, because the "blind spot" only lasted for 13-14 seconds (28ms / 2 = 14) until it normalized, and then you could hit the player properly again for at least 16-17 seconds (35Hz / 2 = 17.5) until it cycled back to the same "blind spot" and looped.
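For reference, the length of the pre-3.1 cycle follows from the arithmetic in the original report: a client stepping every 28 ms against a server at 35.75 Hz (a 27.972 ms step) slips by one full step every 28 / (28 - 1000/35.75) tics. A minimal sketch of that calculation, with hypothetical helper names:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: how many client tics pass before a client stepping
// every clientStepMs has drifted by one full step relative to a server
// stepping every serverStepMs. This is the report's formula:
// clientStep / (clientStep - serverStep).
double BeatPeriodTics(double clientStepMs, double serverStepMs) {
    const double driftPerTicMs = std::fabs(clientStepMs - serverStepMs);
    assert(driftPerTicMs > 0.0);  // equal steps never drift apart
    return clientStepMs / driftPerTicMs;
}

// Same period expressed in seconds of client time.
double BeatPeriodSeconds(double clientStepMs, double serverStepMs) {
    return BeatPeriodTics(clientStepMs, serverStepMs) * clientStepMs / 1000.0;
}
```

For a 28 ms client step and a 35.75 Hz server, both helpers agree with the report: 1001 tics, or about 28.03 seconds.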

Quote from Kaminsky

If you increase or decrease the client's RTT (round-trip time) by 28 ms while in-game, then their commands will now arrive 14 ms sooner or later on the server's end because that is half of their RTT. This means that instead of arriving within the middle of the server's tick (which is what we want), they're now arriving around the same time as the server ticks (which is what we don't want). Due to fluctuations in ping and tick periods on the client's end that I mentioned earlier, the client's commands start arriving inconsistently (again), causing the stuttering to relapse.

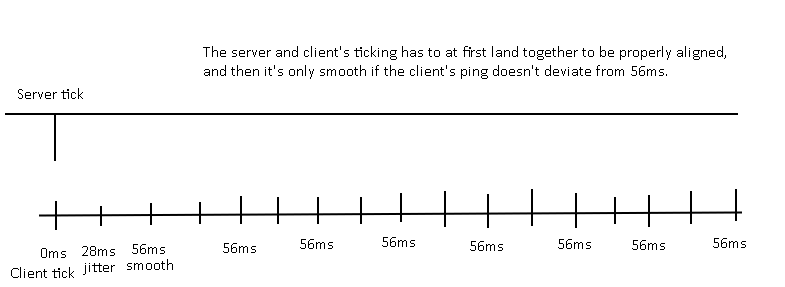

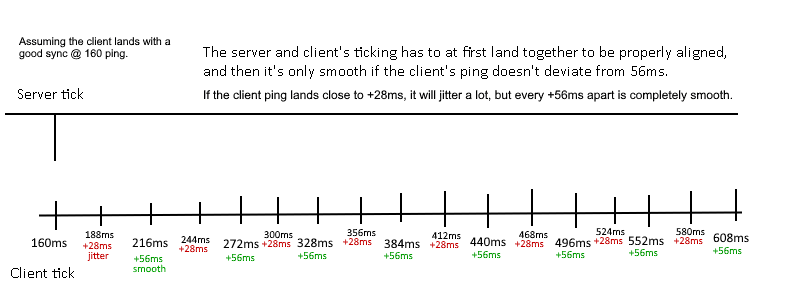

I'm sorry, but I think we're talking about different things here and misunderstanding each other. To me, it seems that the 35Hz tickrate causes the server to expect commands from the client at moments when the client can't deliver them, because the client and server tick at different rates. There's a "blind spot" where it will lag no matter what. If the client, by some unlucky chance, lands on that "blind spot" with its ping, it will jitter horribly for everyone on the server. Client-side prediction masks this for the client itself, but turning it off reveals the severe stutter. The offending client will in turn perceive everyone else as stuttering too. I uploaded a crude illustration of how I perceive the issue.

If possible, I'd like to request a test build with the following:

* Commands to manually offset the time upwards or downwards, to see if that helps shift the client's ticking offset when it lands between the server's ticks.

* An option to switch the tickrate between 28ms and 35Hz, to see if that improves things for me. It might fix the "blind spot between every 56ms" issue.
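To make the shape of these requests concrete, here is a minimal sketch of the state such debug commands would touch. It is purely illustrative: `DebugClockControls` and its members are hypothetical names, and how a manual offset would combine with the automatic calibration is an assumption.

```cpp
#include <cassert>

// Hypothetical debug-only controls for the two requested experiments.
// Neither this struct nor any command names exist in Zandronum; the sketch
// only shows what state such commands would need to manipulate.
struct DebugClockControls {
    int manualOffsetMs = 0;     // extra shift applied on top of the calibrated offset
    bool useFixed28ms = false;  // false: 1000/35 ms per tic, true: 28 ms per tic

    // "Offset up/down" commands: nudge the shift by deltaMs (e.g. +1 or -1).
    void NudgeOffset(int deltaMs) { SetOffset(manualOffsetMs + deltaMs); }

    // Set the shift directly; the +/-1000 ms bound is an arbitrary sanity check.
    void SetOffset(int offsetMs) {
        assert(offsetMs > -1000 && offsetMs < 1000);
        manualOffsetMs = offsetMs;
    }

    // Toggle between the 35 Hz tic length and a fixed 28 ms tic length.
    void ToggleTicLength() { useFixed28ms = !useFixed28ms; }

    double TicLengthMs() const { return useFixed28ms ? 28.0 : 1000.0 / 35.0; }
};
```

Nudging twice up and once down leaves a manual offset of 1 ms, and toggling switches the tic length from about 28.571 ms to exactly 28 ms.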

Can you confirm that you never get any stuttering or jitter when playing with "cl_capfps 0", "cl_predict_players 0" and "chase" all at the same time? Does your player always move completely smoothly, or does it jitter and stutter around? I almost never get a good sync and have to restart the .exe until I do.

What does the "gametic-lowtic" error mean? I got it once when reconnecting.

Quote from Kaminsky

I apologize, but can you elaborate a bit more on this statement? I don't think I quite understand what you mean by the client "perceiving its own sync towards the server"

If you play with "cl_capfps 0", "cl_predict_players 0" and "chase" to observe how good your client's sync is, you'll find that whenever you stutter locally, you also appear to stutter to other clients who are otherwise smooth. Does that make sense?

Quote from unknownna

I find that it can in rare cases improve the sync marginally, but still not seem to fix it. It feels like it doesn't shift the offset enough or something.

I can confirm that reconnecting can actually almost fix the issue if you're lucky, but that happens very rarely. Shifting the client's time by some number of milliseconds to align it with the server's ticks seems to be the way to go, but it doesn't fix or account for the "blind spot between every 56ms" issue. It might just need to be a bit more aggressive when aligning the time.

(0024138)

unknownna

2024-11-15 04:01 (edited on: 2024-11-15 05:02)

Hey, I noticed you made some new updates to this and went ahead and tested them early.

Wow, now this is incredible! I'm almost speechless. It seems that you did the impossible, congratulations! Amazing work!

I've tested it for a bit now and made some notes below:

* I sometimes had to increase or decrease the time offset by 1-2 to make it sync better on the fast-moving floor in unlagged_debug_03.wad; if the offset wasn't perfect, the player would jitter every now and then after a few seconds. If it's not too much to ask, could there also be a command to set the offset directly (setclockoffset) instead of always having to step it up or down by 1? Entering it without a value would print the current offset. It would be interesting to test the differences and get a good feel for how the changes affect the sync. Did you also make sure that clients can only use these commands in debug builds, so they can't abuse them?

Further testing shows that it sometimes has to be shifted further by hand to make it land right. I wonder if the alignment needs to be more aggressive, as suggested earlier, and offset a bit more. I'll test more.

* The fullscreen / oversized window issue seems to have been resolved with the new updates. Amazing!

* The client jitter/stutter on certain maps (MAP02, idl201x_a.wad) hasn't been eliminated quite yet: maps with lots of scrolling textures and floors that repeatedly move up and down still cause players to jitter slightly. It's most noticeable to players with uncapped FPS watching other clients who also have uncapped FPS, but it's still a massive improvement over the horrible jitter from before. Using the "idmypos" cheat and checking with "cl_predict_players 0" confirms that the client's x/y coordinates shift when it stutters, and the camera also glitches locally when it jitters, especially with "cl_capfps 1". There seems to be some undefined behavior causing this bug, and it should be looked into. It's especially noticeable when using +jump while changing directions. Is the player's z height sent at full precision like x/y? Do the rapid changes in textures and floor offsets collide with the rate of client-server packets?

* The client spawns temporarily at the wrong place when joining the game after "changemap" map changes. It can also lead to a "connection interrupted" message that lasts for a while. I think the client erroneously inherits its position from the former map.

* It still stutters very slightly at times, even with the movebuffer on. It's very minor, though, and not really noticeable to others unless the player changes direction during the stutter. Does the stutter occur because the server decides to process 2 movement commands in a single tick? I think it happens because the underlying clock offset didn't land right. Adjusting the clock offset seems to make it better and nearly perfect.

* The connection strength indicator still shows 1/4 very often, which makes me think it's caused by the "blind spot" that the client's ping lands on, because the server uses a timestep of 35Hz whereas the Windows client can only have a timestep of 28ms. Maybe this makes the client's commands land somewhere outside the server tick. As Leonard stated in the OP:

Quote from Leonard